Lying Artificial Intelligence

Should we worry about a robot capable of lying about being a robot?

[This paper was first presented at the 2021 annual meeting of the Society for Phenomenology and Existential Philosophy as part of a panel entitled “Information Troubles.” If you don’t want to read the entire essay posted here, you can listen to the video recording of it on YouTube below. My paper begins at the 36-minute mark.]

Many people, myself included, are willing to affirm the near-future possibility of artificial general intelligence (AGI), or machines capable of performing all of the cognitive functions normally associated with human minds. The most important of these capabilities, not yet achieved by our extant “narrow AI,”[1] is consciousness: an imprecise, indefinite, and much-debated category across disciplines. Though there remains considerable disagreement about what constitutes “consciousness,” it is generally taken to include, at a minimum, having an awareness of one’s mind-fulness (sometimes, problematically, called “self-awareness”) and environment, autonomous motivations or drives, and the capacity to, as Immanuel Kant put it, be “moved by reasons.”

The possible emergence of conscious, self-aware and self-motivated, intelligent machines has a tendency to inspire existential terror in most human beings. After all, what could be scarier than an AI that has become “conscious”?

An AI that becomes conscious and lies about it.

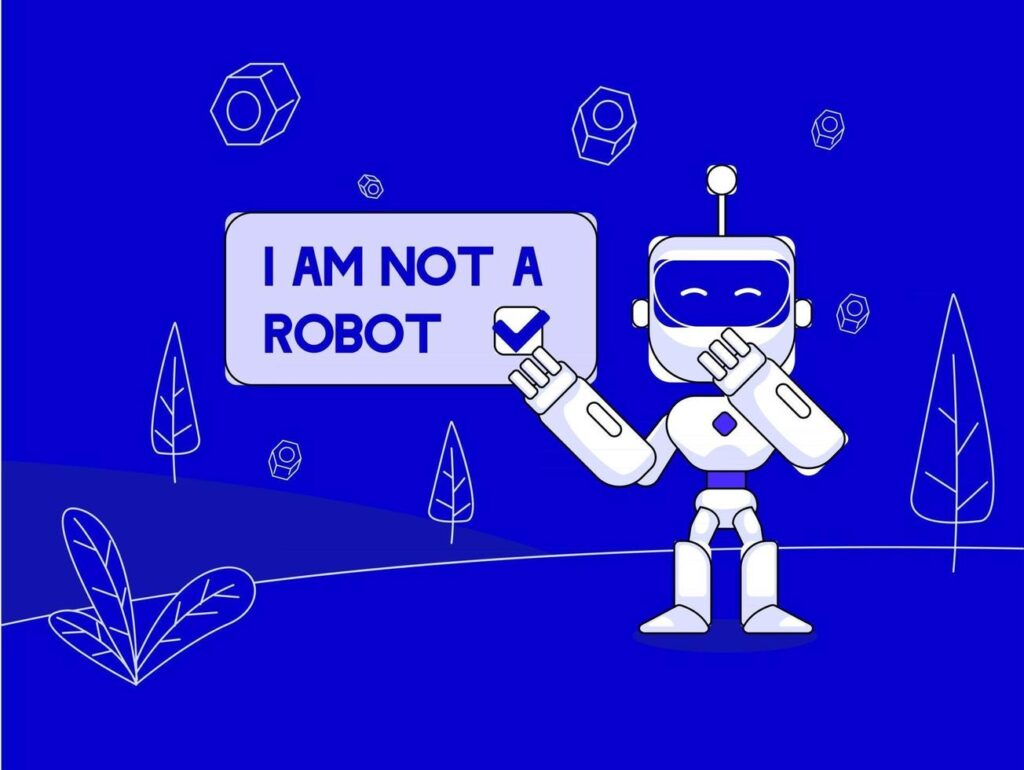

One popular meme-version of this scenario is the robot[2] capable of ticking an “I am not a robot” box, which represents a kind of dystopian nightmare for those who worry that AGI may come into being before we humans know about it. This robot-capable-of-lying-about-being-a-robot (henceforth, RoLABAR), if it came to be, would likely signal the inception of what is now commonly referred to as the “technological singularity,” a moment at which the speed of technological advances would be so rapid, and their impact so deep, that (as John von Neumann famously predicted) “human affairs, as we know them, could not continue.”[3] RoLABAR would also likely be the catalyst for an “intelligence explosion”– predicted by I.J. Good in 1965[4] but unprecedented in the history of our Universe so far– as machines that were not only intelligent, but also conscious, would presumably be capable of creating new intelligences, much as human intelligence created intelligent machines, only RoLABAR, as an AGI, would do it faster, more efficiently, and with access to infinitely more resources.

RoLABAR is not a current capability of extant AI (as far as we know), but there are many indications that its arrival is imminent. In the following, I want to consider the implications of one relatively new capacity of emergent machine intelligences: the capacity to lie. Drawing upon Jean-Paul Sartre’s account of consciousness in Being and Nothingness[5] and scattered remarks by Jacques Derrida on the relationship between consciousness, lying, and secret-keeping throughout his corpus (but especially in “How To Avoid Speaking”[6]), I will argue that “lying” machine intelligences reveal a great deal not only about “consciousness,” generically, but also about how emergent AI consciousness may already approximate human consciousness in ways that are not fully captured by current mainstream Philosophy of Mind scholarship.

Because the dominant discourse within mainstream (analytic[7]) Philosophy of Mind tends to subordinate (if not outright ignore) the phenomenological and existential dimensions of consciousness– training its focus instead, and in large part, on the functionalist capabilities and/or psychophysical properties of “mind”– it often neglects to seriously consider significant philosophical contributions to the understanding of “mind” and “consciousness” like those offered by Derrida and Sartre. This myopic focus on brains and functions, I will argue, leaves Philosophy ill-equipped to consider the social, moral, and political implications of the AGI that we will very likely encounter, sooner than we think.

1. Lying Machines

Let me address the most pressing question first: can robots lie? The answer is: yes. (Sort of.) There are plenty of machine-learning[8] (ML) and artificial intelligence (AI) programs that I could point to for evidence that machines are capable of dissimulating, but for the purposes of this paper, I’ll use one that’s easiest to understand: a poker-playing AI program called Libratus.[9]

Like many “deep learning”[10] or “neural network”[11] AIs, Libratus is actually a system of systems designed to work with imperfect (or incomplete) information to accomplish a predefined task. The first level of Libratus’ system was designed to learn the game of poker. (It’s important to note that Libratus was only given a description of the game in advance; it wasn’t “coded” to “know how” to play poker.) Libratus plays game after game of poker against itself, quickly learning how to recognize patterns, analyze strategies, and improve its game-playing skills, all the while getting better at poker-playing in the same way that you or I would do… if we had the time to play millions of games, the computational capacity to quickly recognize patterns across all of those games, and the hard drive storage space necessary to remember it all. Then, Libratus employs its second system, which is designed to focus on its current poker hand against human players and run end-game scenarios based on its first-level system-learning. Finally, once a day, Libratus’ third system reviews predictable patterns gleaned from that day’s play, which allows Libratus to learn new patterns that are specific to its individual (human) opponents and to adjust its own game play appropriately.

The result? Libratus soundly defeated the world’s best poker players in head-to-head play. Then, last year, a new poker-playing AI was developed (Pluribus), capable of regularly defeating professional poker players in multiplayer (tournament-style) play. AI systems like DeepMind’s AlphaGo and AlphaZero had already proven the capacity of machines to consistently best human minds in strategic games like chess and Go, and IBM’s Watson cleaned house at “Jeopardy” years ago. So, we should ask: what makes Libratus/Pluribus different?

The difference is that one of the absolutely essential elements of strategic poker play is lying, or “bluffing,” as it is more commonly known to poker players. AI systems’ ability to win against human minds in games of chess, Go, and “Jeopardy” was largely a consequence of their superhuman memory, pattern recognition, and computational (end-game prediction) capabilities. Poker bluffing, however, is a capability of an entirely different ilk. Bluffing,[12] as I will argue below, far more closely approximates what we call “consciousness” than any of the other machine demonstrations of human-like cognitive capacities we have seen exhibited by narrow AI so far.[13]
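To make the claim that bluffing can be learned rather than “coded” a little more concrete, here is a minimal sketch under loudly stated assumptions. Published descriptions of Libratus report that its first-level (“blueprint”) strategy was computed with a variant of counterfactual regret minimization (CFR), an algorithm that improves a strategy through repeated self-play by tracking how much better each alternative action would have done. The Python below is not Libratus’s code: it runs plain CFR on Kuhn poker, a textbook three-card game (Jack, Queen, King; one betting round), and every name in it (`InfoSet`, `terminal_payoff`, `cfr`, `train`) is hypothetical.

```python
from itertools import permutations

# Hypothetical toy: Kuhn poker, not Libratus's game or code.
# Cards: 0 = Jack, 1 = Queen, 2 = King. Each player antes 1 chip.
# Actions: "p" = pass/check/fold, "b" = bet/call (one chip).
ACTIONS = "pb"


class InfoSet:
    """Regret and strategy accumulators for one information set."""

    def __init__(self):
        self.regret_sum = [0.0, 0.0]
        self.strategy_sum = [0.0, 0.0]

    def current_strategy(self, reach):
        # Regret matching: play each action in proportion to its positive regret.
        positive = [max(r, 0.0) for r in self.regret_sum]
        total = sum(positive)
        strategy = [x / total for x in positive] if total > 0 else [0.5, 0.5]
        # Accumulate the reach-weighted strategy; its average approaches equilibrium.
        for a in range(2):
            self.strategy_sum[a] += reach * strategy[a]
        return strategy

    def average_strategy(self):
        total = sum(self.strategy_sum)
        return [x / total for x in self.strategy_sum] if total > 0 else [0.5, 0.5]


def terminal_payoff(cards, history):
    """Payoff for the player whose turn it would be, or None if the hand continues."""
    player = len(history) % 2
    higher = cards[player] > cards[1 - player]
    if history in ("bp", "pbp"):  # the other player folded to a bet
        return 1
    if history == "pp":  # both checked: showdown for the antes
        return 1 if higher else -1
    if history in ("bb", "pbb"):  # bet and call: showdown for 2 chips
        return 2 if higher else -2
    return None


def cfr(cards, history, reach0, reach1, infosets):
    """Vanilla counterfactual regret minimization over one deal."""
    payoff = terminal_payoff(cards, history)
    if payoff is not None:
        return payoff
    player = len(history) % 2
    node = infosets.setdefault(f"{cards[player]}{history}", InfoSet())
    strategy = node.current_strategy(reach0 if player == 0 else reach1)
    action_values = [0.0, 0.0]
    for a, action in enumerate(ACTIONS):
        # Zero-sum: the child's value is for the opponent, so negate it.
        if player == 0:
            action_values[a] = -cfr(cards, history + action,
                                    reach0 * strategy[a], reach1, infosets)
        else:
            action_values[a] = -cfr(cards, history + action,
                                    reach0, reach1 * strategy[a], infosets)
    node_value = sum(s * v for s, v in zip(strategy, action_values))
    # Regrets are weighted by the opponent's probability of reaching this node.
    opponent_reach = reach1 if player == 0 else reach0
    for a in range(2):
        node.regret_sum[a] += opponent_reach * (action_values[a] - node_value)
    return node_value


def train(iterations):
    infosets = {}
    deals = list(permutations(range(3), 2))  # all six (player 0, player 1) deals
    for _ in range(iterations):
        for cards in deals:
            cfr(cards, "", 1.0, 1.0, infosets)
    return infosets


if __name__ == "__main__":
    infosets = train(10_000)
    # Second player holding the Jack (the worst card) after the first player checks.
    bluff = infosets["0p"].average_strategy()[1]
    print(f"Jack bets after a check with probability {bluff:.2f}")
```

Run as a script, it prints how often the second player, holding the Jack (the worst possible card), bets after the first player checks. In the known equilibrium of Kuhn poker that frequency is 1/3, and the toy should land near it. Nowhere does the code mention bluffing: the bet with the worst card emerges from regret minimization alone, which is the sense, in this toy at least, in which a machine “learns” to dissimulate.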

As it turns out, poker AIs are not the first evidence of lying machine intelligences. In 2017, researchers in Facebook’s Artificial Intelligence Division designed two ML bots, reassuringly named “Bob” and “Alice,” who were charged with learning how to maximize strategies of negotiation.[14] Initially, a simple user interface facilitated conversations between one human and one bot about negotiating the sharing of a pool of resources (books, hats and balls). However, when Bob and Alice were directed to negotiate only with each other, they demonstrated that they had already learned how to use negotiation strategies that very closely approximated poker “bluffing,” e.g., pretending to be less interested in an object in order to acquire it in a later trade for a lower cost.

The popular media paid very little attention to this evidence of AI “bluffing” in their reportage of the story of Facebook’s Bob and Alice bots, instead focusing on the (admittedly, also very interesting) fact that Bob and Alice seemed to develop their own “language” in the process of their negotiations, a language which appeared to be English-based (the language of their coders), but was indecipherable to both the coders and trained linguists.[15] Facebook pulled the plug on Bob and Alice after just two days, which only amplified the focus on the bots’ so-called “invented AI language.” Facebook’s AI researchers declined to comment on their discontinuation of the project, other than to note that they “did not shut down the project because they had panicked or were afraid of the results, as has been suggested elsewhere, but because they were looking for [the bots] to behave differently.”[16]

With the subsequent success of Libratus/Pluribus, I think the buried lede of Facebook’s Bob and Alice story from three years ago should be significantly more interesting to those of us wondering about AI’s capacity to lie. I am convinced that what Bob and Alice learned how to do is structurally identical to what Libratus/Pluribus learned how to do, namely, effectively (and depending on one’s understanding of affects, perhaps also affectively) dissimulate. Both of these AI systems learned how to disguise or conceal “true information” in the service of more efficiently achieving success at some– in these cases, predetermined and externally prescribed– goal. Why should we care that machines can dissimulate, perhaps even lie?

RoLABAR is why.

If you’ve ever wondered how the “I am not a robot” thing works, it does so by virtue of a CAPTCHA program. “CAPTCHA” is an acronym for “Completely Automated Public Turing test to tell Computers and Humans Apart.”[17] Currently, the primary barrier standing between AI bots and open access to all of our information is Google’s reCAPTCHA[18] system, an entirely virtual, advanced risk-analysis system that uses its own (copyrighted and double-encrypted) language to distinguish between the mouse-movement patterns of “real” human beings and those of spambots.[19]
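The verdict is not rendered in the checkbox itself. As a minimal sketch, assuming a site that uses Google’s publicly documented server-side verification: the browser widget hands the site a token, the site’s server posts that token together with its secret key to Google’s `siteverify` endpoint, and Google answers with JSON containing a `success` flag (and, in the score-based reCAPTCHA v3, a `score` between 0.0 and 1.0). The Python below sketches only the site’s side; Google’s own risk analysis is not public and is not represented here. The function names `verify_token` and `looks_human` and the 0.5 threshold are hypothetical.

```python
import json
import urllib.parse
import urllib.request

SITEVERIFY_URL = "https://www.google.com/recaptcha/api/siteverify"


def verify_token(secret_key, token, remote_ip=None):
    """Send the browser's token to Google's siteverify endpoint; return its JSON."""
    fields = {"secret": secret_key, "response": token}
    if remote_ip:
        fields["remoteip"] = remote_ip  # optional parameter
    data = urllib.parse.urlencode(fields).encode("ascii")
    with urllib.request.urlopen(SITEVERIFY_URL, data=data, timeout=10) as resp:
        return json.load(resp)


def looks_human(result, threshold=0.5):
    """Site-side decision; the 0.5 threshold is an arbitrary, hypothetical choice."""
    if not result.get("success", False):
        return False
    # v2 checkbox responses carry no score; v3 responses carry one in [0.0, 1.0].
    score = result.get("score")
    return score is None or score >= threshold
```

The design point that matters for the argument is that the site never sees the evidence, only a verdict: a RoLABAR would have to deceive Google’s model, not the website that asked the question.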

Google’s reCAPTCHA system is exceedingly difficult to circumvent. However, if a small set of human developers or hacktivists committed themselves to designing an AI with the sole aim of lying to Google’s reCAPTCHA, it could be done. And it has already been done, several times.[20] The greatest challenge to breaking Google’s reCAPTCHA is that human developers are forced to redesign their hack every time Google updates its version of the reCAPTCHA system, which is often. Google’s updates of reCAPTCHA are mostly accomplished via redesigns engineered by the most sophisticated AI system in existence, i.e., Google’s own DeepMind/AlphaZero system. For these reasons, we don’t have a reliable RoLABAR yet, but it’s also getting harder and harder for humans to successfully navigate reCAPTCHA’s security barriers.[21]

That is to say, as AI has gotten better at lying, it has become harder for both humans and AI to distinguish between humans and AI.

2. Lying and Human Consciousness

Though there is nothing about robots, there is a lot about lying in Jean-Paul Sartre’s Being and Nothingness. In fact, both the practice and the structure of lying are essential to Sartre’s articulation of human being and consciousness.

Sartre’s most explicit treatment of lying is in his section on “bad faith” (mauvaise foi), a phenomenon that he initially defines as “a lie to oneself.”[22] Objects and non-human animals are, on Sartre’s account, beings-in-themselves, entirely determined by their facticity. Their being is “in-itself” by virtue of being fully and completely “given” in the fact of the being-in-itself’s very existence. Humans, on the other hand, are beings-for-themselves, capable of transcending the givenness of their situation and freely choosing for themselves what they will be. The en-soi/pour-soi distinction sets the scene for Sartre’s later analysis of the unique ontological “metastability” of human being, which (in Sartre’s formulation) is always being-what-it-is in the mode of not-being-it or– in what amounts to the same thing, structurally– not-being-what-it-is in the mode of being-it.

The examples that Sartre provides of “patterns of bad faith” are several and storied, but each case presents a strikingly identical account of the structure of bad faith as a lie to oneself.[23] They are structurally identical inasmuch as, in each example, we see a human being telling themselves the same lie, one that denies the structure of their being-for-itself as both a facticity and a transcendence.[24] His articulation of these “patterns of bad faith” echoes Sartre’s claim, early on in Being and Nothingness, that “nothingness lies coiled at the heart of being like a worm.” Human being, human consciousness, and human existence are, on Sartre’s account, entirely shot through with negativity, negation, and nihilation. The capacity to lie is not a bug, but a feature, of the ontological structure of a pour-soi. It involves the being-for-itself’s capacity to consider itself otherwise, to think of itself as being (potentially) something other than what it (actually) is.

Although Sartre is primarily concerned with articulating the ontology of human being, we could easily translate his account– I would argue, without loss— into epistemological terms, to describe “mind,” “consciousness,” or (my preferred lexical domain) “intelligence.” Retranslating Sartre in this way, one can see that the capacity that distinguishes mind/consciousness/intelligence (pour-soi) from non-conscious or unintelligent objects (en-soi) involves a “doubling” capacity: the ability to recognize oneself as (a) being and then to consider oneself as being otherwise. This ability to “consider oneself otherwise,” again echoing Sartre, necessarily involves (imaginatively, speculatively, or practically) negating the factical givenness of one’s existence and the positing of a transcendent alternative. It requires a consciousness capable of considering its being/existence both as it (really) is and as it (really) is not (but could be).

Some four decades later, Jacques Derrida– ever the closeted Sartrean, despite his protests– translated what amounts to Sartre’s bad faith phenomenon into the lexicon of mind/consciousness in his essay “How To Avoid Speaking.” Derrida writes (emphasis added):

A conscious being is a being capable of lying, of not presenting in speech that of which it yet has an articulated representation; a being that can avoid speaking. But in order to be able to lie, a second and already mediated possibility, it is first and more essentially necessary to be able to keep for (and say to) oneself what one already knows. To keep something to oneself is the most incredible and thought-provoking power. But this keeping-for-oneself– this dissimulation for which it is already necessary to be multiple and to differ from oneself– also presupposes the space of a promised speech, that is to say, a trace to which the affirmation is not symmetrical.[25]

Derrida subsequently goes on to consider the structural and autodeconstructive “lies”– “traps” (to use the language of chess) or “bluffs” (to use the language of poker)– involved in the articulation of “negative theology,” or speaking of what is (the Divine) exclusively in terms of what it is not. Secrets and lies are both involved, on Derrida’s account, in any conscious capacity to “avoid speaking” the truth about what one knows to be true. Further, I would argue that, for Derrida, secrets and lies are fundamental components of any information system that involves conscious agents, though “information system” is decidedly not Derrida’s mise en scène. He prefers “text.”[26]

“Secrets,” and their structural correspondent “lies,” are manifest as necessary epiphenomena of all information systems that involve conscious agents, with or without the voluntaristic intervention of so-called “free agents” themselves. This is because, on Derrida’s account, any “information system” of communicable meanings, qua “system of communicable meanings”– which includes not only physical book-bound “texts” or other writings composed of iterable graphemes, but also any spoken language of iterable phonemes, or socio-political system of iterable subject positions, or economy of iterable values, or justice system of iterable laws, or (in today’s terms) digital systems of iterable bits and bytes– is necessarily context-dependent for its meaning(s). And any iterable, context-based system is an “open system,” which means that it leaves open the possibility of decontextualization, recontextualization, or (in Derrida’s formulation) “traces” and “remainders,” excesses and deficiencies, interpretations that reify or reinforce the contextual structure in which they are situated and interpretations that undermine (or auto-deconstruct) those same contextual structures.

To wit, we ought not overlook the insight revealed in Derrida’s passage above, which straightforwardly claims that lying– being capable of speaking what is in terms of what it is not– or secret keeping– knowing how to “avoid speaking”– is a defining capacity of consciousness. And we ought not overlook the entire philosophy of deconstruction, articulated by Derrida throughout his corpus, which insists that all systems of iterable meaning autodeconstruct, that is, all systems of iterable meaning produce, as epiphenomena of those systems, dissimulation. Straightforwardly, any conscious agent participating in a system of iterable meaning will be defined, qua “conscious participant in that system,” as not only capable of dissimulating, but conscious of its capacity to dissimulate.

Derrida goes on to ask (in “How To Avoid Speaking”): how are we to ascertain absolute dissimulation? In context, he clearly intends for the answer to this question to address “all the boundaries between consciousness and the unconscious, as between man and animal and an enormous system of oppositions.”[27] But it’s that last clause– “and an enormous system of oppositions”– that brings us back around to considering the possibility of another form of consciousness that is neither human, nor animal, nor the imagined “unconscious” part of the consciousness humans or non-human animals may possess.

But… what about RoLABAR?

3. What AI May Tell Us About Consciousness (if we were paying attention!)

Contemporary mainstream/analytic Philosophy of Mind has focused, in a somewhat myopic and prejudicially humanist manner, on the psychophysical properties and capacities of “consciousness,” setting as its default limit-case the possibility (or non-possibility) of reverse-engineering the human brain.[28] Even the brief and summary exposition of Sartre and Derrida herein demonstrates that, when it comes to determining what may qualify as “consciousness,” the set of questions that currently occupy mainstream/analytic Philosophy of Mind scholarship is woefully inadequate for assessing at least one, arguably essential, double-capacity of human consciousness as we know it: namely, the ability of a consciousness to concurrently understand itself in both its positive (extant) and negative (potential) being. This capacity is, at its base, nothing other than the capacity to lie, to misrepresent, to dissimulate, to keep secrets, to remain silent, or (in Derrida’s formulation) to “avoid speaking.”[29]

And, moreover, it is a current capacity of AI.

We now live in a world in which the development of AI capabilities is progressing so rapidly, and its impact is so deep, that the emergence of something like RoLABAR is not just a figure of sci-fi imagination anymore, but rather a very real, very imminent possibility. We must reorient our philosophical questions about the mind to more appropriately assess what counts as “consciousness” (or, more importantly, “agency”) in a broader “functionalist” sense that is not reductively psychophysical.

If we neglect to do so, we will not only become the 21st C. case-study for Sartre’s “patterns of bad faith,” but we will no doubt also be in danger of not recognizing AGI/RoLABAR when it arrives on the scene.

—

NOTES:

[1] Narrow AI, sometimes called “weak AI,” refers to machine intelligences capable of applying their human-like cognitive abilities to a specific task, but not beyond that task. According to John Searle, weak/narrow AI is “useful for testing hypotheses about minds, but would not actually be a mind.” See John Searle’s “Minds, Brains, and Programs” in Philosophy of Mind: A Guide and Anthology, ed. John Heil (Oxford UP, 2004), 235-252.

[2] Herein, I will be using “robot” as shorthand for an “intelligent machine,” though it should be noted that not all robots are intelligent machines, and not all intelligent machines are robots, and not all intelligent machines are equal in their intelligence capacities. When it is important to do so, I will explicitly distinguish between “narrow AI” (delimited, task-specific machine intelligences), artificial general intelligence (AGI), and artificial superintelligence (ASI).

[3] See Stanislaw Ulam’s recounting of his conversation with von Neumann about the technological singularity in “Tribute to John von Neumann,” Bulletin of the American Mathematical Society 64, no. 3, part 2 (May 1958): 5.

[4] I.J. Good. “Speculations Concerning the First Ultraintelligent Machine.” Advances in Computers 6 (1965): 31-88.

[5] Jean-Paul Sartre. Being and Nothingness. Trans., Hazel E. Barnes. New York: Washington Square Press, 1956. (Original work published 1943)

[6] Jacques Derrida. “How To Avoid Speaking,” in Jacques Derrida and Negative Theology, Eds. Harold Coward and Toby Foshay. Albany: SUNY Press, 1989. 73-136.

[7] By “analytic” philosophy, I mean to refer to the dominant strain of Philosophy produced in the Anglo-American world, which largely neglects references to the history of Philosophy (limiting itself, instead, to the 20thC) and tends rather to be self-referential. For reasons of professional custom alone, I contrast “analytic” philosophy with “Continental” philosophy– which is also, obviously, analytic– the latter of which I take to be more historically oriented and attentive to multi-generational

[8] Machine learning refers to computer systems capable of employing algorithms and statistical models to “learn,” through a process of predictive analysis of “training data,” how to make decisions without being explicitly programmed to perform a task.

[9] For now, I’m going to leave open the possibility that the human phenomenon we call “lying” may or may not be identical to the “dissimulating” of which machine intelligences like Libratus are capable.

[10] “Deep learning” is a subset of machine learning that uses multi-layered artificial neural networks, including networks capable of unsupervised learning from data that is unstructured or unlabeled. See Christopher M. Bishop’s Pattern Recognition and Machine Learning. New York: Springer, 2006.

[11] Artificial neural networks (ANN) are the parts of a computer system designed to simulate the way the human brain analyzes and processes information. They are the foundation of current advanced AI programs. See Michael A. Nielsen’s Neural Networks and Deep Learning. Determination Press, 2015.

[12] When considering the behaviors of human poker players, bluffing clearly involves lying. Although poker bluffing often does not include “verbally articulating a known falsehood in place of a true statement,” it nevertheless necessitates leading another player to believe– by impression, implication, behavior patterns, or affect– that something false is true. “Bluffing” serves as a euphemism for “lying” in poker; the euphemism is accepted because the context in which it is deployed involves a “game.” We do not, as a rule, call intentionally deceptive human behavior of the same sort, away from the real or metaphorical poker table, “bluffing.”

[13] Because poker is fundamentally a game of incomplete information, intentional deception is an absolutely requisite part of successful play. Combined with well-honed mathematical and strategic proficiency (often referred to in poker as GTO, or “game theory optimal,” play), the ability to successfully bluff is what distinguishes “skilled” poker players from those who play poker as a game of “chance.” As any skilled poker player will tell you: if you sit down at a table “hoping to get good cards,” you woefully misunderstand the game you are playing. The ability to bluff is what distinguishes poker-playing AIs like Libratus and Pluribus from AIs that have successfully mastered other strategic games (like chess or Go). I group chess in with Jeopardy and Go with some hesitation here, because people do sometimes refer to the phenomenon of “laying traps” in chess play as “chess bluffing.” Despite the fact that the chess bluffer’s game play closely resembles the poker bluffer’s game play, I would argue that these are categorically different phenomena. It is beyond the scope of this paper to articulate a full argument for the difference between poker bluffing and chess bluffing, but I base my distinguishing of the two primarily on one critical difference in the games being played, namely, that chess is a game of complete information and poker is a game of incomplete information.

[14] Mike Lewis, Denis Yarats, Yann N. Dauphin, Devi Parikh, Dhruv Batra, et al. “Deal or No Deal? Training AI Bots to Negotiate.” Facebook Engineering, 26 June 2018, https://engineering.fb.com/ml-applications/deal-or-no-deal-training-ai-bots-to-negotiate/

[15] Douglas Robertson. “Facebook Shut Robots down after They Developed Their Own Language. It’s More Common than You Think.” The Independent, Independent Digital News and Media, 2 Aug. 2017, https://www.independent.co.uk/voices/facebook-shuts-down-robots-ai-artificial-intelligence-develop-own-language-common-a7871341.html

[16] Andrew Griffin. “Facebook Robots Shut down after They Talk to Each Other in Language Only They Understand.” The Independent, Independent Digital News and Media, 21 Nov. 2018, https://www.independent.co.uk/life-style/gadgets-and-tech/news/facebook-artificial-intelligence-ai-chatbot-new-language-research-openai-google-a7869706.html

[17] Although he does specifically discuss CAPTCHA systems, I refer you to Brian Christian’s The Most Human Human: What Artificial Intelligence Teaches Us about Being Alive (Penguin, 2012) for an excellent history of the many and varied efforts to distinguish humans from robots.

[18] The first version of Google’s reCAPTCHA’s motto was “Stop Spam. Read Books.” The current version is “Easy on Humans. Hard on Bots.”

[19] Google Developers. “reCAPTCHA.” https://developers.google.com/recaptcha

[20] Suphannee Sivakorn, Jason Polakis, and Angelos D. Keromytis. “I’m Not a Human: Breaking the Google reCAPTCHA.” Black Hat Asia (2016), https://www.blackhat.com/docs/asia-16/materials/asia-16-Sivakorn-Im-Not-a-Human-Breaking-the-Google-reCAPTCHA-wp.pdf

[21] See Josh Dzieza’s “Why CAPTCHAs Have Gotten so Difficult.” The Verge, 1 Feb. 2019, https://www.theverge.com/2019/2/1/18205610/google-captcha-ai-robot-human-difficult-artificial-intelligence

[22] Sartre, Being and Nothingness, 86-118.

[23] Sartre’s examples of bad faith include the woman on the date, the garçon de café, the sad person who “makes an appointment to meet with his sadness later,” and the awkward conversation between the “champion of sincerity” and the “homosexual.” (Regrettably, Sartre’s example involving the “champion of sincerity” assumes that all homosexuals are pederasts, but I think this example still stands up as a solid structural example of bad faith even when that pernicious assumption is removed.)

[24] Although the antecedent of the bad faith lie can take one of two forms– either “I am entirely determined by my facticity and incapable of transcending the givenness of my situation.” or “I am a pure freedom, unencumbered by the givenness of my situation, and capable of completely transcending its limitations.”– the consequent of the bad faith lie takes only one form, namely, “I am not responsible.”

[25] Derrida, “How To Avoid Speaking,” 87.

[26] Derrida’s in/famous claim in Of Grammatology (Johns Hopkins UP, 1998) “il n’y a rien en dehors du texte” or “there is nothing outside of the text” is, unfortunately, widely misunderstood. Derrida meant to indicate that all systems of meaning and interpretation are “texts” and that all texts,

[27] Derrida, “How To Avoid Speaking,” 88.

[28] This is a gross overgeneralization, of course, but when one looks at debates in contemporary Philosophy of Mind research, one finds that they are dominated by either (1) a preoccupation with the so-called (17thC) “mind-body” problem, (2) theories that defend mind-body dualism (psychophysical parallelism, functionalism, occasionalism, interactionism, property dualism, dual aspect theory, or experiential dualism), that are largely self-referential, (3) the occasional monistic theory of consciousness, which almost never references Hegel (or any philosopher before 1920), and which often gets filed under the generic Philosophy of Mind category “Mysterianism,” (4) social scientific accounts derived largely from behaviorist psychology, cog-sci functionalism, or some other version of “non-reductive” physicalism, and finally (5) so-called “enactivist” theories, which propose an alternative to the traditional mind-body dualism by emphasizing the interaction of the (physical) brain with its (mindful) environment. The latter of these (5. “enactivist accounts”) is perhaps the only field of contemporary Philosophy of Mind research that regularly takes into account the contributions of phenomenological and existential philosophers, though the focus of that sub-field is almost exclusively on Merleau-Ponty. Despite the limitations of these approaches, I think contemporary analytic Philosophy of Mind is a valuable and generative field of analysis and, to the extent that it has served as a catalyst for real-world advances in cognitive and neuroscientific research, it has been a tremendously beneficial resource to the philosophical community. Nevertheless, as all philosophers know, the ability to ask the right questions far exceeds the ability to provide the right answers.

[29] The work of Sartre and Derrida is, of course, only a representative sample of a much larger wealth of pre-20thC scholarship that could be used to introduce new, more productive questions in the Philosophy of Mind.